After the release of the child safety feature ‘Communication safety in Messages’ in the United States, Apple is planning to expand it to Canada and the United Kingdom (UK).

In August 2021, Apple announced new ‘Expanded Protections for Children’ features to prevent the spread of Child Sexual Abuse Material (CSAM). These included Communication safety in Messages, Detection of CSAM, and Expanded guidance in Siri, Spotlight, and Safari Search.

However, Apple received significant backlash from cybersecurity experts, who feared that the scanning technology introduced in the Expanded Protections for Children features to detect CSAM would open a Pandora’s box of relentless surveillance and censorship of citizens, especially by authoritarian regimes seeking to suppress dissidents and rival politicians.

Therefore, the company delayed the features to consult with child safety and privacy experts and finally released Communication safety in Messages and Expanded guidance in Siri, Spotlight, and Safari Search in iOS 15.2, iPadOS 15.2, watchOS 8.3, and macOS 12.1 to help keep children safe in the United States.

Nudity detection child safety feature “Communication safety in Messages” is coming to Canada and the UK

The Guardian reports that the child safety feature to detect nudity or CSAM sent or received in Messages will be released in the UK.

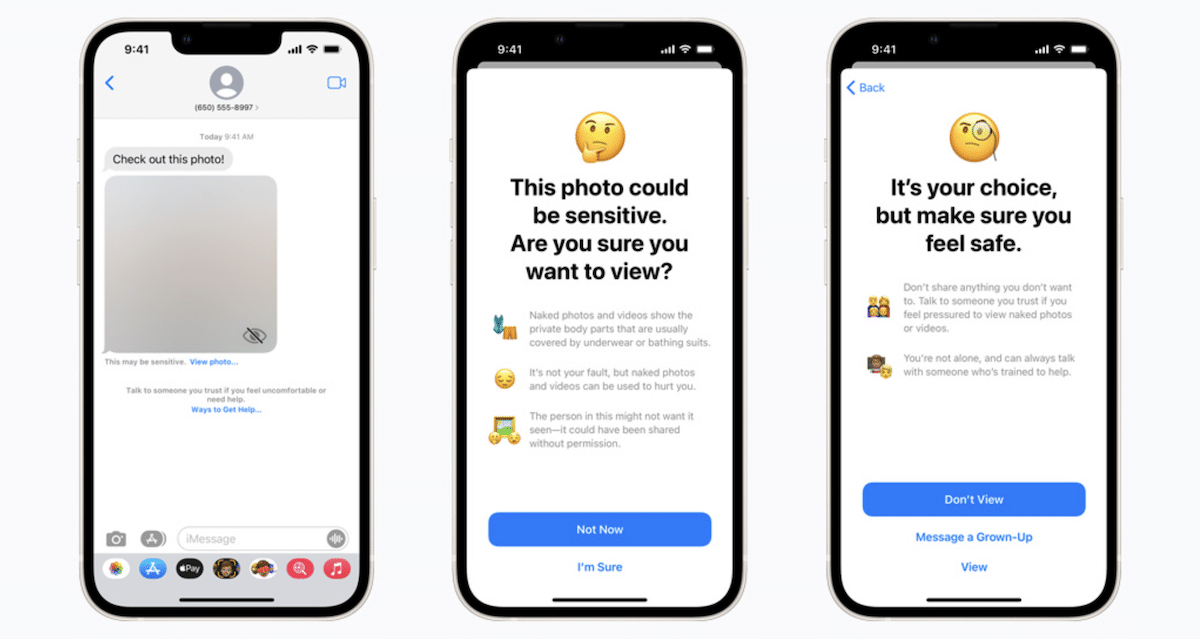

Communication safety in Messages is an opt-in feature that, when enabled, uses AI technology to scan the contents of the Messages app for CSAM or nudity and shows children a warning alert before they view such content. The feature requires a Family Sharing plan.

Furthermore, developer @Rene Ritchie shared that Apple will soon launch Communication safety in Messages and Expanded guidance in Siri, Spotlight, and Safari Search in Canada.

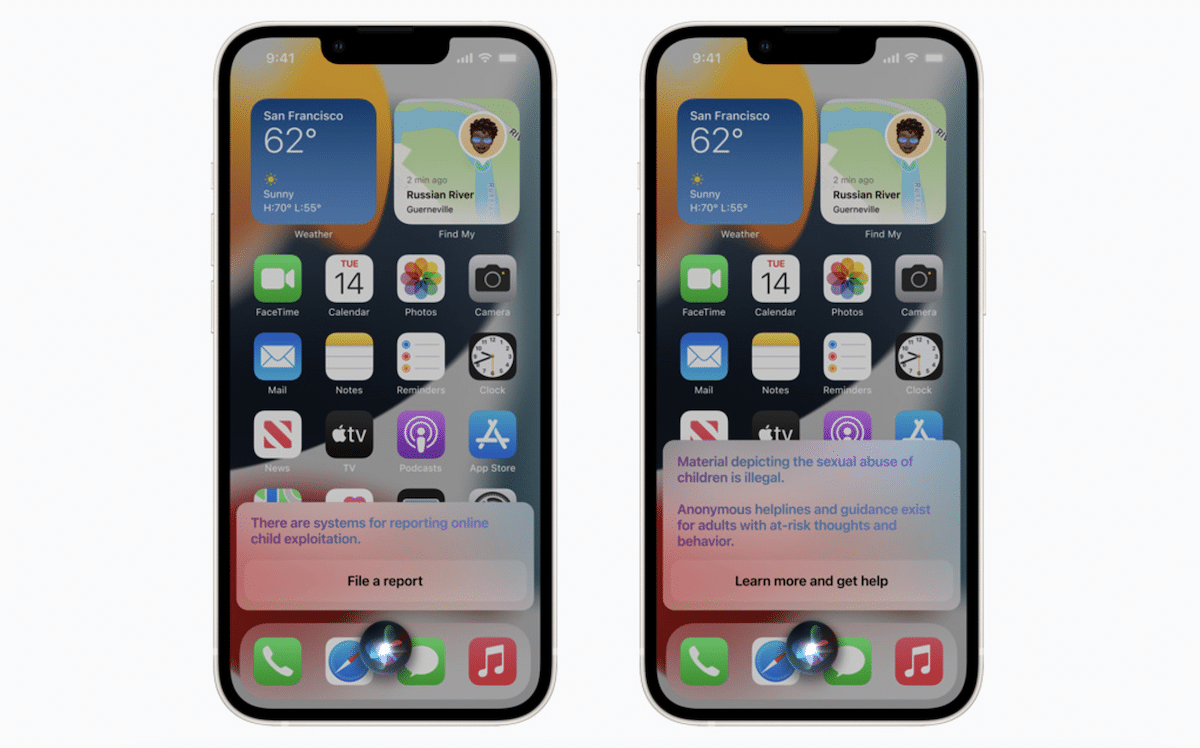

Like the child safety feature for Messages, Apple’s ‘Expanded guidance in Siri, Spotlight, and Safari Search’ feature is designed to intervene when users search for CSAM and provide parents and children with resources or guidance on how to report CSAM.

The third feature of the Expanded Protections for Children, “Detection of CSAM”, has not been released yet. It is designed to scan and detect CSAM images stored in iCloud Photos, and it has received the most criticism for turning iPhones into surveillance devices.

To detect CSAM images, Apple’s new system will carry out on-device matching based on the database of known CSAM image hashes provided by NCMEC and other child safety organizations. The database will be stored on users’ devices as an unreadable set of hashes.
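
The idea of matching against a database of known hashes can be illustrated with a minimal Python sketch. This is not Apple’s implementation: the function names are hypothetical, the sample byte strings are placeholders, and SHA-256 is used only as a stand-in. A cryptographic hash like SHA-256 matches exact bytes only, while a real system of this kind would rely on a perceptual hash that tolerates resizing and re-encoding.

```python
import hashlib


def image_hash(data: bytes) -> str:
    """Return a hex digest for the image bytes.

    Assumption: SHA-256 is a stand-in for illustration only. It matches
    byte-identical files, not visually similar images.
    """
    return hashlib.sha256(data).hexdigest()


def build_hash_database(known_images) -> frozenset:
    """Build the on-device set of known hashes (hypothetical helper)."""
    return frozenset(image_hash(data) for data in known_images)


def matches_known_hash(data: bytes, database: frozenset) -> bool:
    """Check whether an image's hash appears in the known-hash set."""
    return image_hash(data) in database


# Placeholder byte strings stand in for image files.
known = build_hash_database([b"known-image-1", b"known-image-2"])
print(matches_known_hash(b"known-image-1", known))
print(matches_known_hash(b"holiday-photo", known))
```

In this sketch the device stores only the digests, never the original images, which loosely mirrors the article’s point that the database ships as a set of hashes rather than viewable content.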
