Child safety has always been a critical concern in the tech industry, particularly when it comes to preventing the circulation of Child Sexual Abuse Material (CSAM). Apple’s plan to tackle the problem by scanning iCloud Photos for known CSAM proved controversial as soon as it was announced.

Apple’s decision in December 2022 to abandon its CSAM detection tools has raised questions, and a child safety group called the Heat Initiative has been at the forefront of pressing Apple for answers. In response, the tech giant has provided its most detailed explanation yet of why it dropped its CSAM detection plans.

Apple rejected CSAM detection for child safety and user privacy

Child Sexual Abuse Material remains a deeply troubling problem that demands proactive solutions. Apple initially introduced CSAM detection tools to combat the spread of this harmful content, but the proposal drew considerable backlash over its implications for user privacy and security. After Apple shelved the plan, the Heat Initiative took a strong stance against that decision and threatened to organize a campaign against it.

In a detailed response to the Heat Initiative (via WIRED), Erik Neuenschwander, Apple’s director of user privacy and child safety, stressed that the decision to abandon CSAM detection was not taken lightly. He cited the company’s commitment to consumer safety and privacy as the driving force behind its pivot to a set of features known as Communication Safety. Neuenschwander explained that accessing encrypted data to detect CSAM would conflict with Apple’s broader privacy and security principles, principles that have at times put the company at odds with government demands.

Apple’s response underscored its refusal to compromise on user privacy and security. Neuenschwander pointed out that scanning every user’s privately stored iCloud data would create new vulnerabilities that attackers could exploit in data breaches. It would also set a dangerous precedent, one that could lead to bulk surveillance and other unintended consequences and erode the principles Apple is lauded for.

Neuenschwander’s response was addressed directly to Sarah Gardner, the leader of the Heat Initiative, who had expressed disappointment in Apple’s decision to abandon CSAM detection. Gardner argued that the proposed solution would have positioned Apple as a leader in user privacy while eradicating a significant amount of CSAM from iCloud, and she stressed the urgency of deploying technology to combat child sexual abuse.

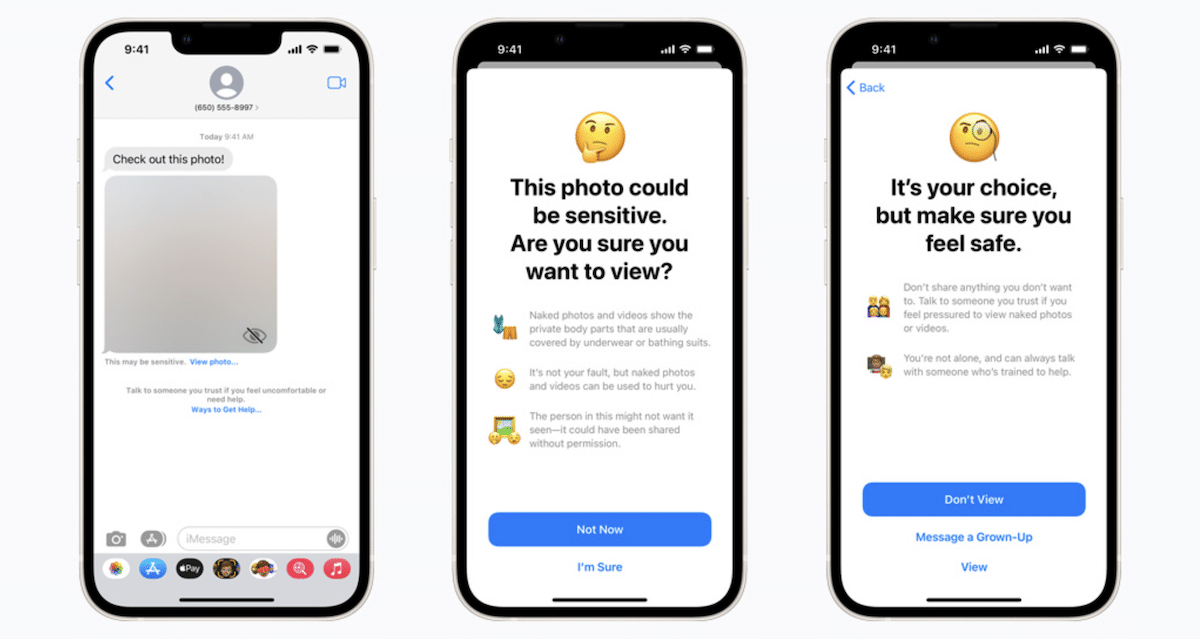

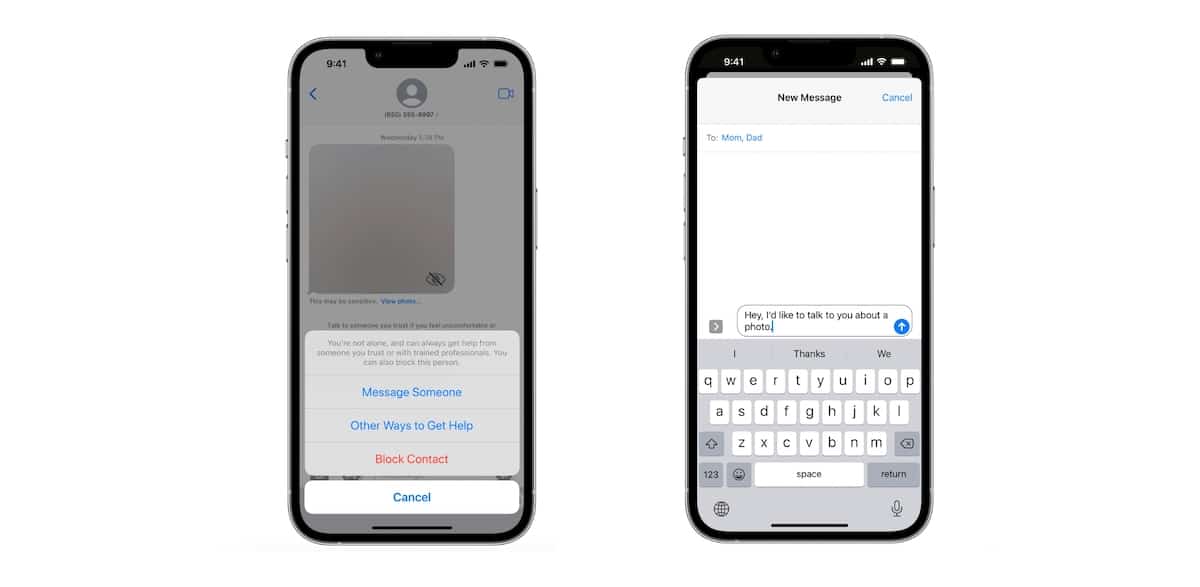

Rather than acting as an intermediary that processes reports, Apple says its approach focuses on connecting victims with appropriate resources and law enforcement agencies. The company is developing APIs that third-party apps can use to educate users and make it easier to report offenders. Apple has also introduced Communication Safety, a feature that helps users avoid explicit content in their messages. The feature was initially available only for children’s accounts, but it is set to expand to adults in iOS 17, with further expansion planned.

Apple’s decision not to proceed with CSAM detection was driven by its commitment to user privacy and security, and its response to the Heat Initiative lays out the concerns and trade-offs behind that decision. Combating child sexual abuse remains a crucial priority, and Apple’s approach aims to balance safeguarding user data with aiding the fight against CSAM.