In an open letter, more than 90 civil rights groups have asked Apple to abandon its plan to scan for child sexual abuse material (CSAM). The groups also want the Cupertino tech giant to drop its plan to analyze iMessage for sexually explicit images, arguing that it could threaten the safety of young gay people.

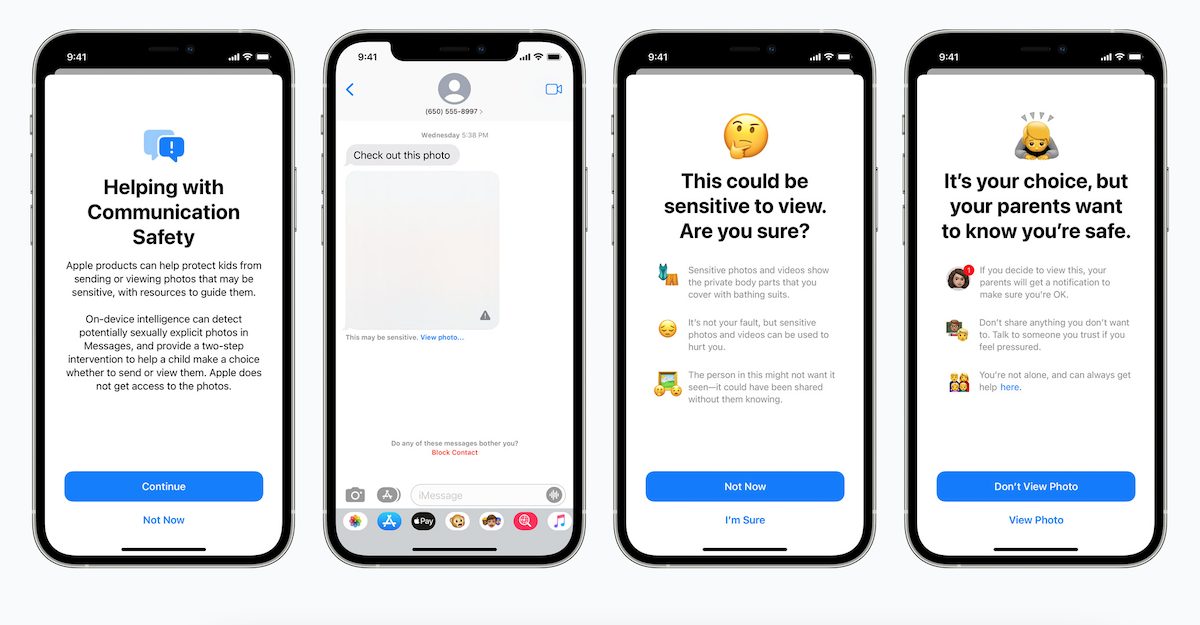

Earlier this month, Apple announced new Child Safety features that will analyze images in iMessage for sexually explicit content, scan iCloud Photos to detect known CSAM, and update Siri and Search to warn, intervene, and offer guidance when users search for CSAM-related topics. The features will arrive this fall with iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.

Over 90 civil rights groups ask Apple to abandon its CSAM plan

Since Apple announced its new Child Safety measures, many companies, technologists, academics, and policy advocates have come out against the plan. Now, over 90 civil rights groups have asked Apple to abandon it over concerns that it will be used “to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children.”

The signatories of the open letter include the American Civil Liberties Union (ACLU), the Canadian Civil Liberties Association, Australia’s Digital Rights Watch, the UK’s Liberty, and the international group Privacy International. The letter warns that the company’s child protection features could be misused by governments around the globe:

> Once this capability is built into Apple products, the company and its competitors will face enormous pressure – and potentially legal requirements – from governments around the world to scan photos not just for CSAM, but also for other images a government finds objectionable.
>
> Those images may be of human rights abuses, political protests, images companies have tagged as “terrorist” or violent extremist content, or even unflattering images of the very politicians who will pressure the company to scan for them.
>
> And that pressure could extend to all images stored on the device, not just those uploaded to iCloud. Thus, Apple will have laid the foundation for censorship, surveillance, and persecution on a global basis.

The groups have urged the tech giant to “abandon those changes and to reaffirm the company’s commitment to protecting its users with end-to-end encryption” and “to more regularly consult with civil society groups, and with vulnerable communities who may be disproportionately impacted by changes to its products and services.”

Apple has not yet responded to the letter. However, it has previously detailed the safeguards it has put in place to prevent misuse of CSAM detection and Communication Safety in Messages.

