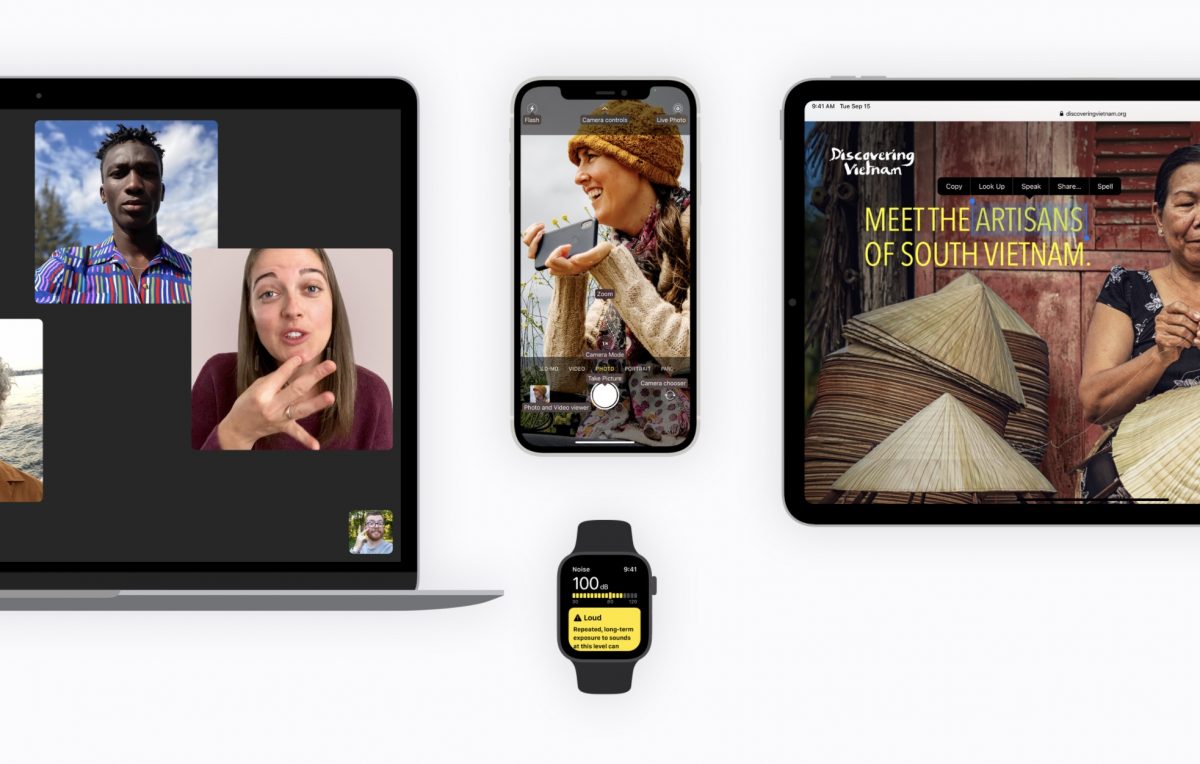

Apple has announced new accessibility features on Apple Watch, iPhone, and iPad for users with mobility, vision, hearing, and cognitive disabilities. Rolling out later this year, the update will let users with limited upper-body mobility control Apple Watch with hand gestures, bring support for third-party eye-tracking devices to iPad so users with limited mobility can control it with their eyes, make VoiceOver smarter at describing images, and more.

Accessibility is woven into Apple’s entire ecosystem. The company offers features such as VoiceOver and Screen Recognition that not only make it easier for people with disabilities to use their devices and complete tasks but also give them the confidence to be productive members of society. In an article published on the 30th anniversary of the Americans with Disabilities Act (ADA), activists and creators with disabilities described how Apple’s accessibility features helped them become musicians, lawyers, and more.

Apple’s new accessibility features coming to Apple Watch, iPad, and iPhone later this year:

- AssistiveTouch on Apple Watch will allow users with limited upper-body mobility to control their smartwatch without touching the screen. The watch’s built-in sensors will detect hand gestures, such as a pinch or a clench, letting users “easily answer incoming calls, control an onscreen motion pointer, and access Notification Center, Control Center, and more.”

- Support for eye-tracking devices in iPadOS will make it possible for users to control the tablet with their eyes. Compatible third-party Made for iPhone (MFi) devices will track where the user is looking and move the pointer accordingly, “while extended eye contact performs an action, like a tap.”

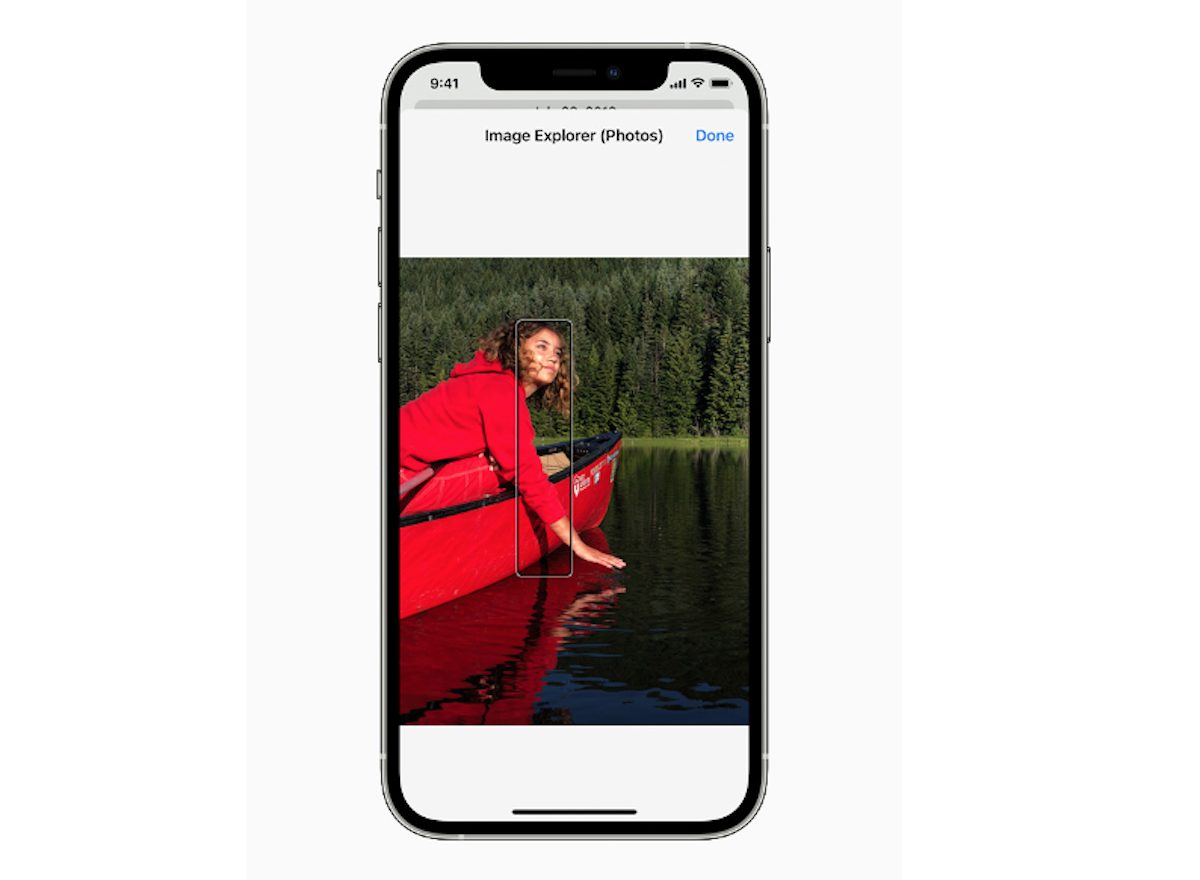

- New VoiceOver features for images will let users who are blind or have low vision explore more details within a picture, such as the people, text, and objects it contains. “Users can navigate a photo of a receipt like a table: by row and column, complete with table headers. VoiceOver can also describe a person’s position along with other objects within images — so people can relive memories in detail, and with Markup, users can add their own image descriptions to personalize family photos.”

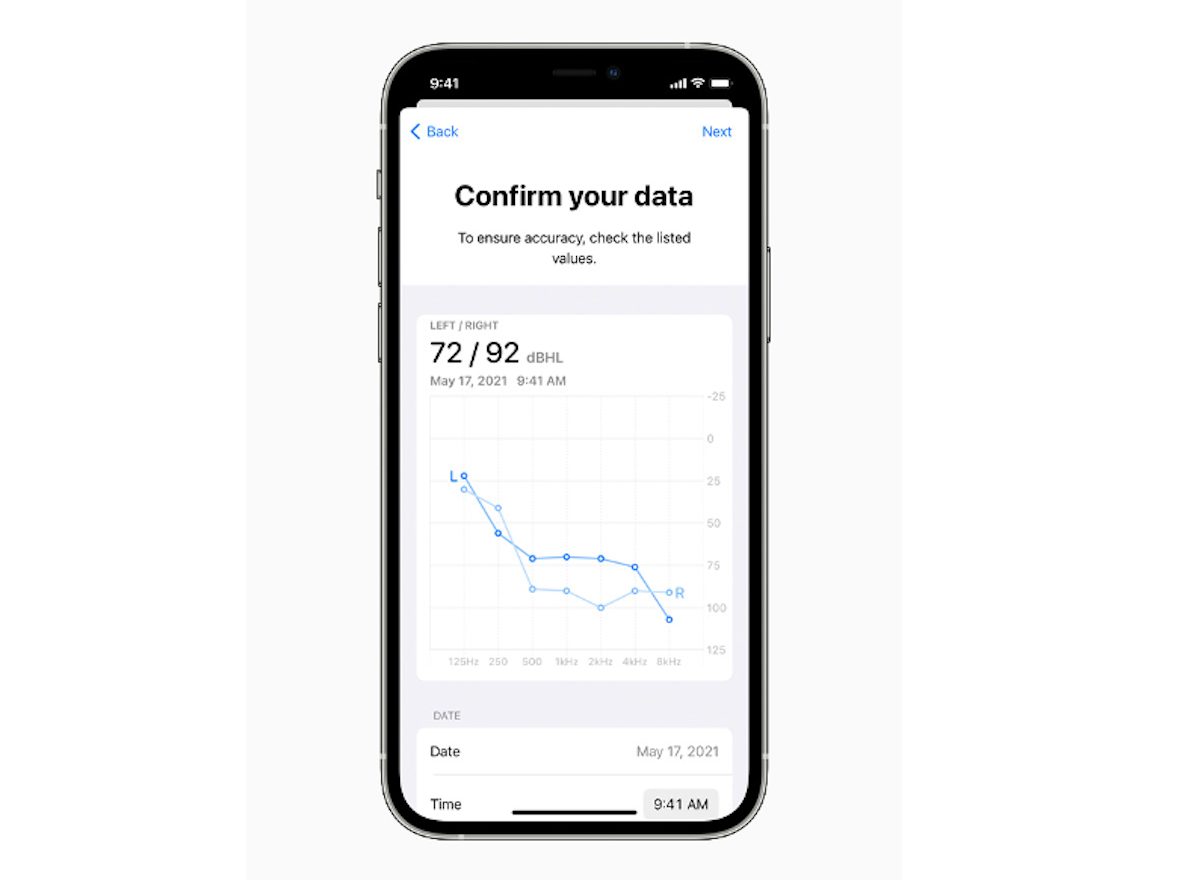

- Support for new bidirectional hearing aids and audiograms. The next-generation MFi hearing aids will allow users with hearing impairments to make hands-free phone and FaceTime calls. The company is also adding audiogram support (an audiogram is a chart that shows the results of a hearing test) to Headphone Accommodations, so users can tailor their audio to their hearing. “Users can quickly customize their audio with their latest hearing test results imported from a paper or PDF audiogram. Headphone Accommodations amplify soft sounds and adjust certain frequencies to suit a user’s hearing.”

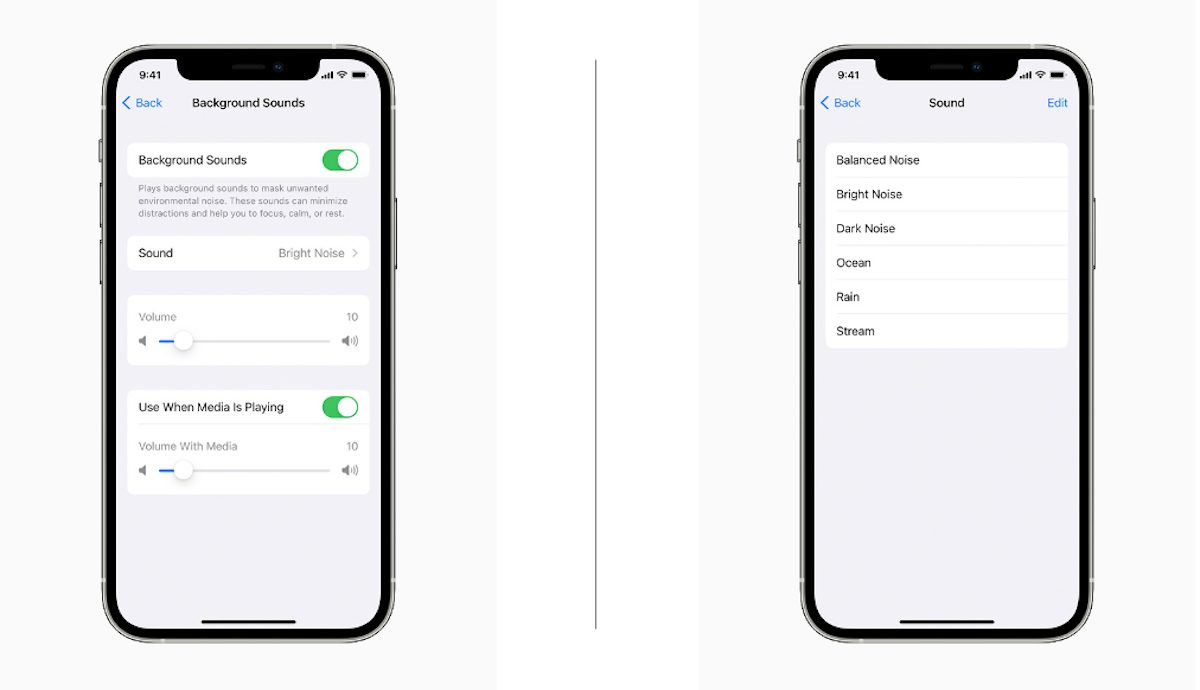

- New Background Sounds, designed to support neurodiversity, will help minimize distracting or uncomfortable everyday sounds. “Balanced, bright, or dark noise, as well as ocean, rain, or stream sounds continuously play in the background to mask unwanted environmental or external noise, and the sounds mix into or duck under other audio and system sounds.”

- Sound Actions for Switch Control, which let users replace physical buttons and switches with mouth sounds.

- Per-app display and text size settings, so users can customize these settings separately for each compatible app.

- New Memoji customizations with oxygen tubes, cochlear implants, and a soft helmet for headwear.

In addition, starting May 20, Apple is launching a new SignTime service to allow users to communicate with AppleCare and Retail Customer Care via sign language.
