Apple's new CSAM detection feature, coming later this year, will launch first in the United States, and the company will consider expanding it to other countries on a country-by-country basis.

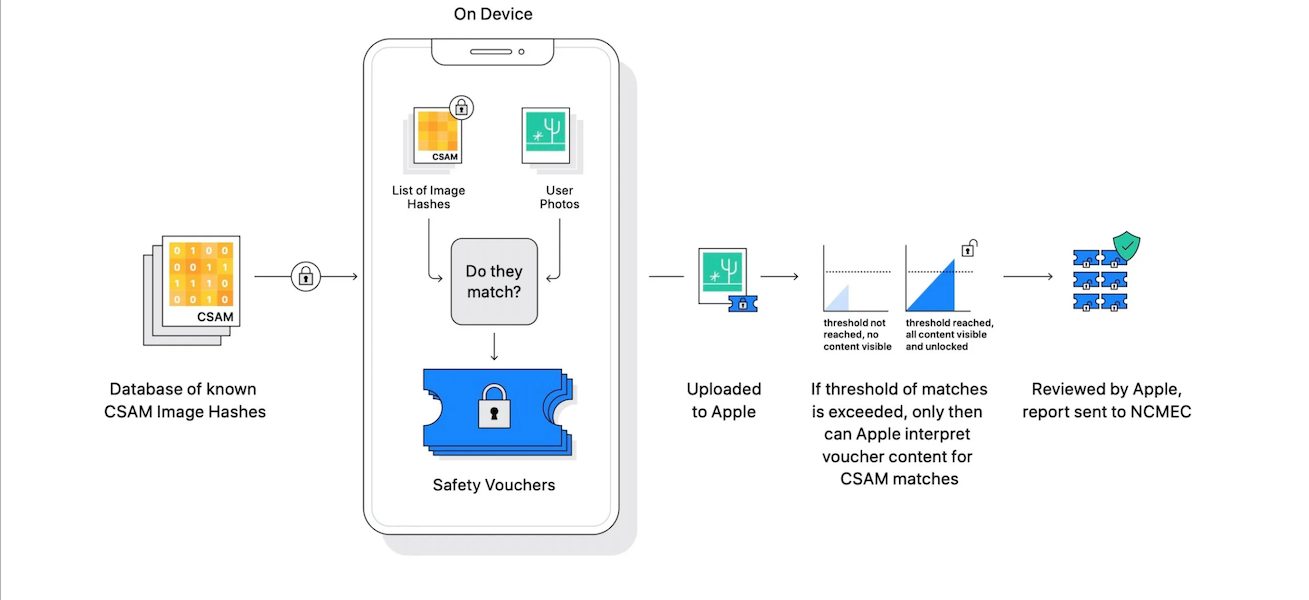

As part of Apple's new Child Safety features, the upcoming Child Sexual Abuse Material (CSAM) detection feature will use an on-device hashing system to match photos uploaded to iCloud Photos against a list of hashes of known child abuse images provided by the National Center for Missing and Exploited Children (NCMEC). Each match is recorded in a cryptographic safety voucher. Once the number of matches for an account exceeds a set threshold, the content becomes eligible for human review, and offenders will be reported to NCMEC or the relevant law enforcement agencies.

The controversial Apple CSAM detection feature will launch in the United States in the Fall

Apple announced that CSAM detection and its other child safety features will arrive in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey this fall. The company has now confirmed that the Expanded Protections for Children will launch only in the U.S. at first, with any release in other regions decided on a country-by-country basis. MacRumors writes:

Apple’s known CSAM detection system will be limited to the United States at launch, and to address the potential for some governments to try to abuse the system, Apple confirmed to MacRumors that the company will consider any potential global expansion of the system on a country-by-country basis after conducting a legal evaluation. Apple did not provide a timeframe for global expansion of the system if such a move ever happens.

Since the announcement, Apple's CSAM detection and iMessage image analysis systems have faced strong criticism. Cryptography and privacy experts argue that these features create a backdoor into the end-to-end encrypted privacy of Apple's users, one that could let governments, especially authoritarian ones, spy on anyone: ordinary citizens, journalists, rival politicians, or dissidents. In response, Apple also explained why, in its view, governments could not exploit the scanning system.

Apple also addressed the hypothetical possibility of a particular region in the world deciding to corrupt a safety organization in an attempt to abuse the system, noting that the system’s first layer of protection is an undisclosed threshold before a user is flagged for having inappropriate imagery. Even if the threshold is exceeded, Apple said its manual review process would serve as an additional barrier and confirm the absence of known CSAM imagery. Apple said it would ultimately not report the flagged user to NCMEC or law enforcement agencies and that the system would still be working exactly as designed.

However, the concerns stem not from the immediate use of the new tools but from their long-term impact. Former NSA contractor and whistleblower Edward Snowden says Apple has turned its devices into surveillance machines: "make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow." His assessment is shared by the Electronic Frontier Foundation, cryptographer Matthew Green, and others.

The hash function will leak out. And that will leave “the secrecy of NCMEC’s database” as the only remaining technical measure securing Apple’s encryption.

But I’m going to tell you a secret: the really bad guys already have that database.

— Matthew Green (@matthew_d_green) August 8, 2021
