Privacy whistleblower Edward Snowden warns that Apple’s improvident CSAM detection system is a “disaster-in-the-making” that will “permanently redefine what belongs to you, and what belongs to them.”

Snowden is a former computer intelligence consultant for the National Security Agency (NSA) in the United States. He came under the spotlight after he revealed global surveillance programs run by the NSA and the Five Eyes intelligence alliance with the cooperation of telecommunication companies and European governments. Although labeled a traitor, Snowden said that people had to be informed of what their governments were doing. Last year, he was vindicated when a U.S. federal court ruled that the U.S. intelligence mass surveillance program exposed by Snowden was “illegal and possibly unconstitutional.” Thus, when he says that Apple’s CSAM detection system will open a door into users’ privacy, his warning carries weight.

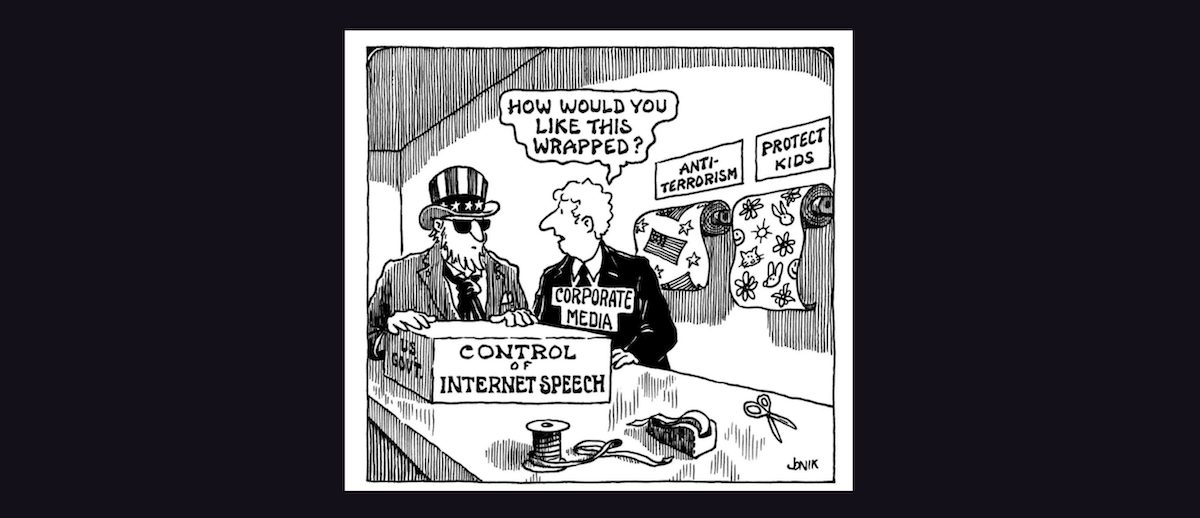

Apple is using child pornography as a decoy to take control of users’ devices

In his detailed article, Snowden deliberately gives no credit to the CSAM detection system’s stated purpose of preventing child pornography, because he argues that Apple does not really care about that. He highlights that predators can easily avoid detection of their CSAM library by disabling iCloud Photos. For Snowden, the system’s ultimate purpose is to gain access to users’ content.

If you’re an enterprising pedophile with a basement full of CSAM-tainted iPhones, Apple welcomes you to entirely exempt yourself from these scans by simply flipping the “Disable iCloud Photos” switch, a bypass which reveals that this system was never designed to protect children, as they would have you believe, but rather to protect their brand. As long as you keep that material off their servers, and so keep Apple out of the headlines, Apple doesn’t care.

That’s the way it always goes when someone of institutional significance launches a campaign to defend an indefensible intrusion into our private spaces. They make a mad dash to the supposed high ground, from which they speak in low, solemn tones about their moral mission before fervently invoking the dread spectre of the Four Horsemen of the Infopocalypse, warning that only a dubious amulet—or suspicious software update—can save us from the most threatening members of our species.

He believes that the CSAM detection system will set a dark precedent that Apple itself will not be able to control, eventually erasing the boundary “dividing which devices work for you, and which devices work for them.” It may appear that Apple gets to decide what content is scanned on users’ devices, but in reality, governments will get to decide “on what constitutes an infraction… and how to handle it.”

So what happens when, in a few years at the latest, a politician points that out, and—in order to protect the children—bills are passed in the legislature to prohibit this “Disable” bypass, effectively compelling Apple to scan photos that aren’t backed up to iCloud? What happens when a party in India demands they start scanning for memes associated with a separatist movement? What happens when the UK demands they scan for a library of terrorist imagery? How long do we have left before the iPhone in your pocket begins quietly filing reports about encountering “extremist” political material, or about your presence at a “civil disturbance”? Or simply about your iPhone’s possession of a video clip that contains, or maybe-or-maybe-not contains, a blurry image of a passer-by who resembles, according to an algorithm, “a person of interest”?

Frustrated by Apple’s shortsightedness and its unwillingness to back away from releasing the scanning system despite denunciation from influential quarters, Snowden concludes that the upcoming ‘Expanded Protections for Children’ will lead to a dark future, “one to be written in the blood of the political opposition of a hundred countries that will exploit this system to the hilt.”

We are bearing witness to the construction of an all-seeing-i—an Eye of Improvidence—under whose aegis every iPhone will search itself for whatever Apple wants, or for whatever Apple is directed to want. They are inventing a world in which every product you purchase owes its highest loyalty to someone other than its owner.

To put it bluntly, this is not an innovation but a tragedy, a disaster-in-the-making.

Or maybe I’m confused—or maybe I just think different.
