In the face of strong criticism, Apple has published a new FAQ document explaining that the new ‘CSAM detection’ photo scanning feature will not impact users’ private iPhone photo library.

Apple’s “Expanded Protections for Children”, and CSAM detection in particular, has been under intense scrutiny since the new child safety features were announced. Child Sexual Abuse Material (CSAM) detection lets Apple scan photos in users’ iCloud Photos to prevent the spread of CSAM.

> By design, this feature only applies to photos that the user chooses to upload to iCloud Photos, and even then Apple only learns about accounts that are storing collections of known CSAM images, and only the images that match to known CSAM. The system does not work for users who have iCloud Photos disabled. This feature does not work on your private iPhone photo library on the device.

Apple confirms that CSAM detection, an on-device hashing system, will match only CSAM images and nothing else

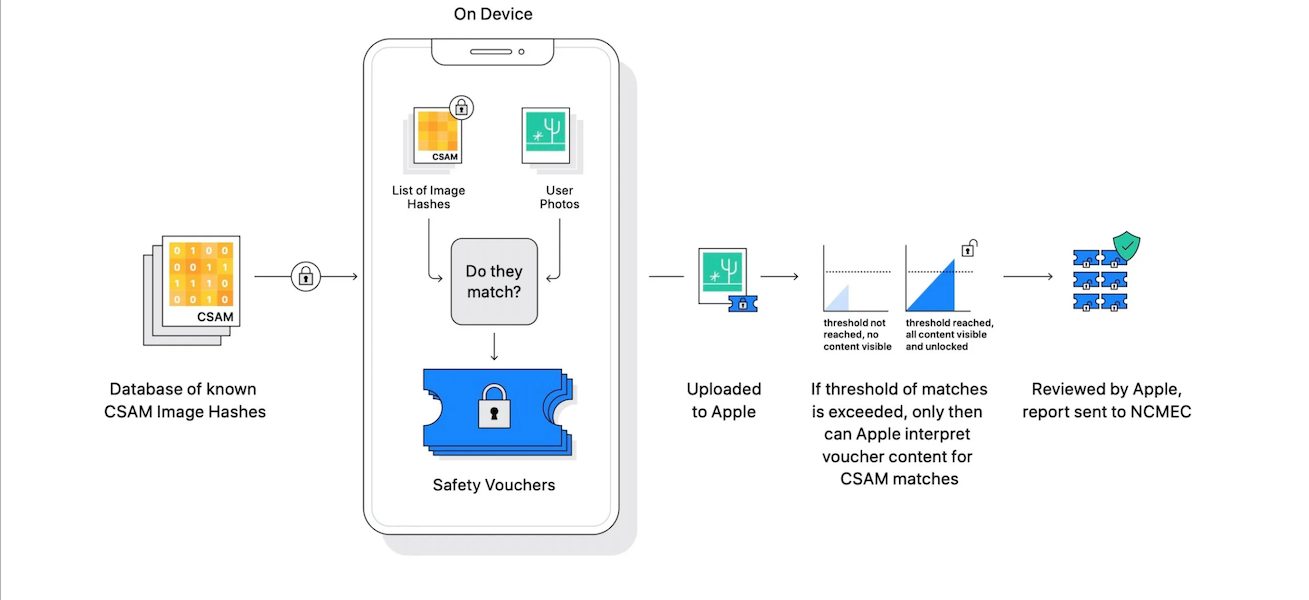

The company further explains that the new client-side photo hashing system does not store CSAM images on the device. Instead, it stores “unreadable hashes” representing known CSAM images provided by child safety organizations.

> Using new applications of cryptography, Apple is able to use these hashes to learn only about iCloud Photos accounts that are storing collections of photos that match to these known CSAM images, and is then only able to learn about photos that are known CSAM, without learning about or seeing any other photos.
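To make the “collections of matching photos” idea concrete, here is a deliberately simplified sketch. It is not Apple’s method: Apple’s technical summary describes a perceptual image hash combined with cryptographic protocols (private set intersection and threshold secret sharing) so that nothing is learned about accounts below the threshold, and none of that cryptography is reproduced here. The sketch assumes SHA-256 as a stand-in hash (which only matches byte-identical files) and a hypothetical `MATCH_THRESHOLD`; all function names are illustrative.

```python
import hashlib

# Hypothetical threshold for illustration only; not Apple's actual value.
MATCH_THRESHOLD = 3


def image_hash(data: bytes) -> str:
    """Stand-in hash: SHA-256 of the raw bytes (NOT a perceptual hash)."""
    return hashlib.sha256(data).hexdigest()


def count_matches(uploads: list[bytes], known_hashes: set[str]) -> int:
    """Count uploaded images whose hash appears in the known-hash set."""
    return sum(1 for data in uploads if image_hash(data) in known_hashes)


def should_flag_for_review(uploads: list[bytes], known_hashes: set[str],
                           threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag an account only when its number of matches reaches the threshold.

    Non-matching images never count toward the total. In this toy model a
    flag would only lead to human review, not an automatic report.
    """
    return count_matches(uploads, known_hashes) >= threshold


# Usage: only images the user uploads (e.g. to iCloud Photos) are checked.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
account_a = [b"holiday.jpg", b"known-1"]
account_b = [b"known-1", b"known-2", b"known-3", b"cat.jpg"]
print(should_flag_for_review(account_a, known))  # False
print(should_flag_for_review(account_b, known))  # True
```

Note that this sketch computes the match count in the clear. The privacy claim in Apple’s FAQ, that it learns nothing about accounts or photos that fall short of the threshold, depends on the cryptographic layer the sketch leaves out.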

Apple also assures users that the CSAM scanning system will not flag any other type of content in iCloud Photos.

> CSAM detection for iCloud Photos is built so that the system only works with CSAM image hashes provided by NCMEC and other child safety organizations. This set of image hashes is based on images acquired and validated to be CSAM by child safety organizations. There is no automated reporting to law enforcement, and Apple conducts human review before making a report to NCMEC. As a result, the system is only designed to report photos that are known CSAM in iCloud Photos. In most countries, including the United States, simply possessing these images is a crime and Apple is obligated to report any instances we learn of to the appropriate authorities.

While not denying the need to protect children from predators and child sexual abuse material, cryptography and privacy experts are concerned about the long-term impact of the photo scanning and Messages analysis features. They argue that Apple has created a backdoor into users’ privacy that government agencies could exploit.

