Groups that usually praise Apple for protecting user privacy are now sharply criticizing its new 'Expanded Protections for Children' features. In response, Apple's senior vice president of Software Engineering, Craig Federighi, sat down with The Wall Street Journal to clarify the "misunderstanding" surrounding the implementation and impact of the new child safety features, and their characterization as a "backdoor".

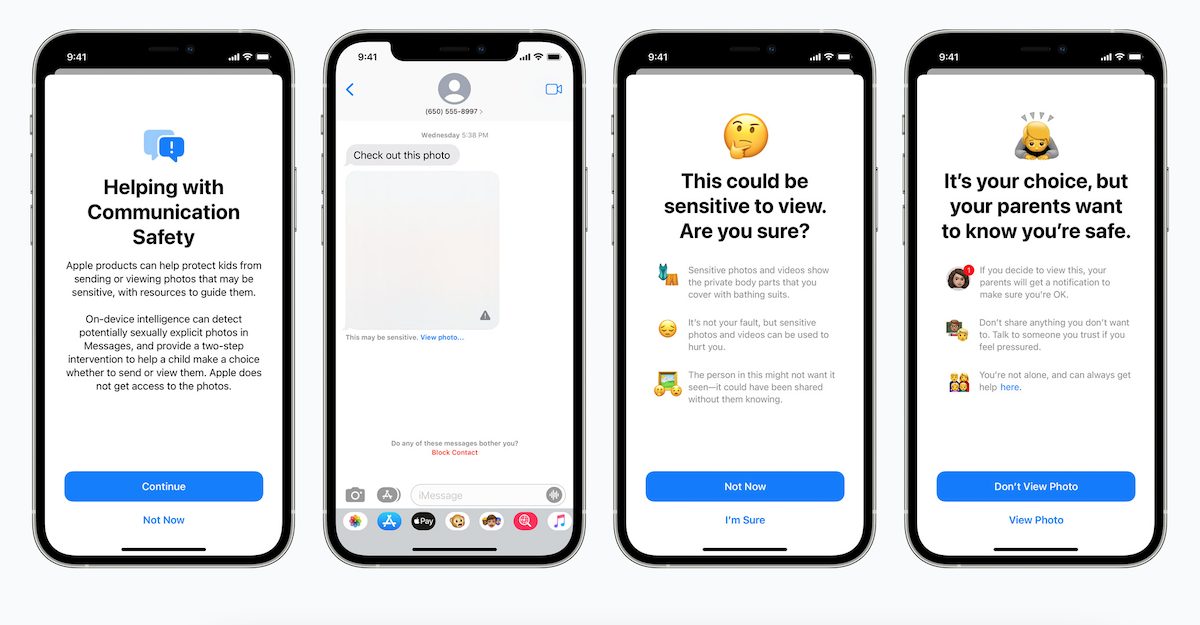

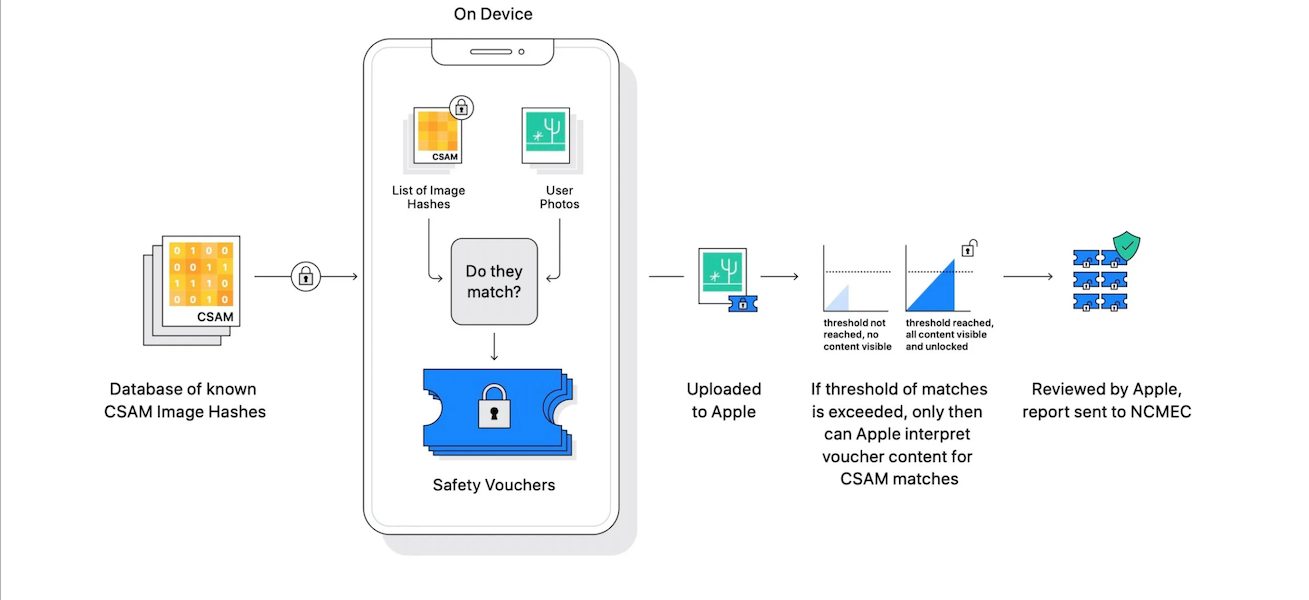

This fall, the company will launch two features. The first is the controversial CSAM (child sexual abuse material) detection system, meant to curb the spread of child pornography. The second is Communication Safety in Messages, which discourages children aged 12 or under from receiving, sending, and viewing sexually explicit content. In addition, Siri and Search will be updated to warn users who search for CSAM.

Federighi says Apple's CSAM detection and Communication Safety in Messages systems function in the most auditable and verifiable way possible

Distinguishing between the CSAM detection and Communication Safety in Messages systems, Federighi admitted that Apple wishes it had announced the features more clearly, which would have nipped the confusion in the bud. In the video interview, posted on the WSJ's YouTube channel, he said:

We wish that this had come out a little more clearly for everyone, because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood.

He reiterated that Apple will not be scanning users' private photos or reading their messages. CSAM detection, which is based on a neural hashing system, works only "in the pipeline" of uploading images from users' iPhones to iCloud. Communication Safety in Messages takes effect only when parents enable the feature for their children.

Addressing concerns that the system breaches users' privacy by creating a backdoor to scan and analyze their data, he said:

I really don’t understand that characterization. Well, who knows what’s being scanned for? In our case, the database is shipped on device. People can see, and it’s a single image across all countries. We ship the same software in China with the same database as we ship in America, as we ship in Europe.

If someone were to come to Apple, Apple would say no, but let's say you aren't confident. You don't want to just rely on Apple saying no. You want to be sure that Apple couldn't get away with it if we said yes. Well, that was the bar we set for ourselves in releasing this kind of system.

There are multiple levels of auditability, most privacy-protecting way we can imagine, and verifiable way possible. So we're making sure that you don't have to trust any one entity or even any one country, as far as how these images are and what images are part of the process.

However, the interview did not address a long-term risk that experts and Apple's own employees have highlighted: the possibility that government agencies could exploit the features.
