To address security and privacy concerns about new child safety features, Apple’s head of Privacy, Erik Neuenschwander, sat down with TechCrunch’s Matthew Panzarino. In the detailed interview, Neuenschwander explained how the CSAM detection and Communication Safety in Messages systems work to protect children from online predators and reduce the chances of grooming.

He also addressed critics’ fears that the upcoming scanning and analysis systems will create a backdoor that governments could exploit to spy on particular users.

Apple’s CSAM detection system is designed to make it very difficult to access non-CSAM data

Although announcing CSAM detection and Communication Safety in Messages together created a lot of confusion, Neuenschwander said that all three features of ‘Expanded Protections for Children’ (the third being interventions in Siri and Search) share common goals: keeping children safe and preventing the dissemination of CSAM. That, he said, is why Apple announced them together.

CSAM detection means that there’s already known CSAM that has been through the reporting process, and is being shared widely, re-victimizing children on top of the abuse that had to happen to create that material in the first place.

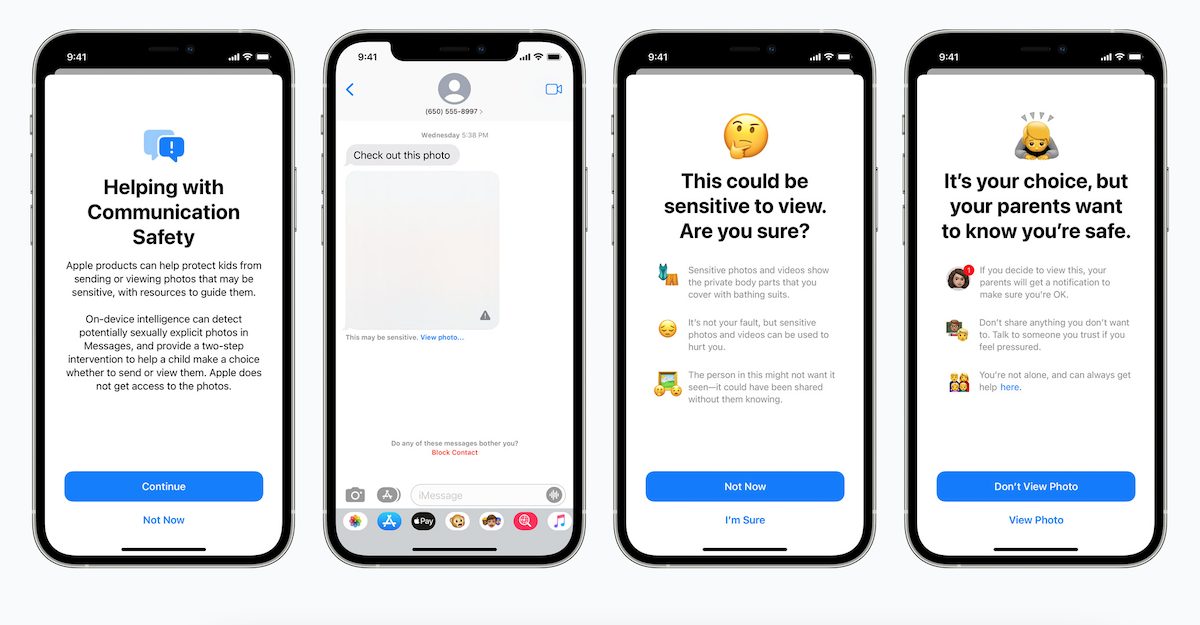

And so to do that, I think is an important step, but it is also important to do things to intervene earlier on when people are beginning to enter into this problematic and harmful area, or if there are already abusers trying to groom or to bring children into situations where abuse can take place, and Communication Safety in Messages and our interventions in Siri and search actually strike at those parts of the process. So we’re really trying to disrupt the cycles that lead to CSAM that then ultimately might get detected by our system.

Addressing the controversial possibility that the CSAM hashing system could be used to target specific users, Neuenschwander said that even if a government pressured Apple to match other types of content, the company has built in multiple layers of protection that would make such requests for non-CSAM fingerprinting of little use.

Well first, that is launching only for U.S. iCloud accounts, and so the hypotheticals seem to bring up generic countries or other countries that aren’t the U.S. when they speak in that way, and therefore it seems to be the case that people agree U.S. law doesn’t offer these kinds of capabilities to our government.

But even in the case where we’re talking about some attempt to change the system, it has a number of protections built in that make it not very useful for trying to identify individuals holding specifically objectionable images.

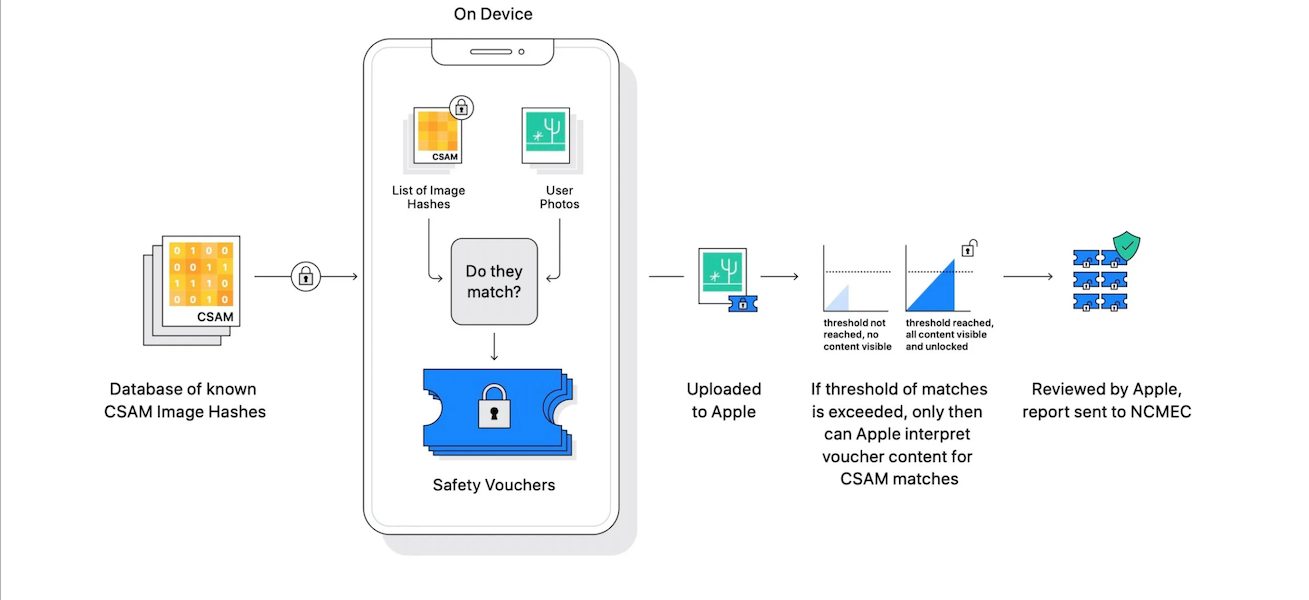

- The hash list is built into the operating system. We have one global operating system and don’t have the ability to target updates to individual users, and so hash lists will be shared by all users when the system is enabled.

- And secondly, the system requires the threshold of images to be exceeded so trying to seek out even a single image from a person’s device or set of people’s devices won’t work because the system simply does not provide any knowledge to Apple for single photos stored in our service.

- And then, thirdly, the system has built into it a stage of manual review where, if an account is flagged with a collection of illegal CSAM material, an Apple team will review that to make sure that it is a correct match of illegal CSAM material prior to making any referral to any external entity. And so the hypothetical requires jumping over a lot of hoops, including having Apple change its internal process to refer material that is not illegal, like known CSAM and that we don’t believe that there’s a basis on which people will be able to make that request in the U.S. And the last point that I would just add is that it does still preserve user choice, if a user does not like this kind of functionality, they can choose not to use iCloud Photos and if iCloud Photos is not enabled no part of the system is functional.
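The second protection, that Apple learns nothing about any single photo until a threshold is exceeded, can be illustrated with a textbook threshold scheme. The sketch below is a minimal Python illustration assuming Shamir’s secret sharing: each photo that matches the global hash list is imagined to carry one share of a per-account key, and that key (and with it the matching vouchers) becomes recoverable only once a hypothetical `THRESHOLD` of matches is reached. The article does not describe the cryptography Apple actually uses; `make_shares`, `recover`, `THRESHOLD`, and the prime modulus are all hypothetical choices made for this example.

```python
import random

# Hypothetical parameters chosen for illustration; the article gives no values.
PRIME = 2**127 - 1  # a Mersenne prime; all share arithmetic is done mod PRIME
THRESHOLD = 3       # hypothetical number of matching photos needed


def make_shares(secret, threshold, count, rng):
    """Split `secret` into `count` shares, any `threshold` of which recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate the polynomial with Horner's method
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover(shares):
    """Lagrange-interpolate the polynomial at x = 0 to get its constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    rng = random.Random(0)
    account_key = rng.randrange(PRIME)  # hypothetical per-account secret
    # One share per photo that (hypothetically) matched the global hash list.
    shares = make_shares(account_key, THRESHOLD, count=5, rng=rng)
    print(recover(shares[:THRESHOLD]) == account_key)      # threshold met
    print(recover(shares[:THRESHOLD - 1]) == account_key)  # below threshold
```

In this toy model, any `THRESHOLD` shares reconstruct the key, while fewer shares interpolate to an unrelated value and reveal nothing about it, which mirrors the point that a single matching photo gives Apple no knowledge.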

Discussing a scenario in which a user’s device is physically compromised, he said that such an attack would be rare and expensive, and would gain the attacker very little in the end.

I think it’s important to underscore how very challenging and expensive and rare this is. It’s not a practical concern for most users, though it’s one we take very seriously, because the protection of data on the device is paramount for us. And so if we engage in the hypothetical, where we say that there has been an attack on someone’s device: that is such a powerful attack that there are many things that that attacker could attempt to do to that user. There’s a lot of a user’s data that they could potentially get access to. And the idea that the most valuable thing that an attacker — who’s undergone such an extremely difficult action as breaching someone’s device — was that they would want to trigger a manual review of an account doesn’t make much sense.

Because, let’s remember, even if the threshold is met, and we have some vouchers that are decrypted by Apple, the next stage is a manual review to determine if that account should be referred to NCMEC or not, and that is something that we want to only occur in cases where it’s a legitimate high-value report. We’ve designed the system in that way, but if we consider the attack scenario you brought up, I think that’s not a very compelling outcome to an attacker.

Neuenschwander explained that CSAM scanning applies only to iCloud Photos users, and that Messages analysis applies only to children’s accounts whose parents enable the Communication Safety in Messages feature. As a result, privacy remains as it was for most users; only users involved in illegal CSAM activity will be affected.

We have two co-equal goals here. One is to improve child safety on the platform and the second is to preserve user privacy.

Now, why to do it is because this is something that will provide that detection capability while preserving user privacy. We’re motivated by the need to do more for child safety across the digital ecosystem, and all three of our features, I think, take very positive steps in that direction. At the same time we’re going to leave privacy undisturbed for everyone not engaged in the illegal activity.

