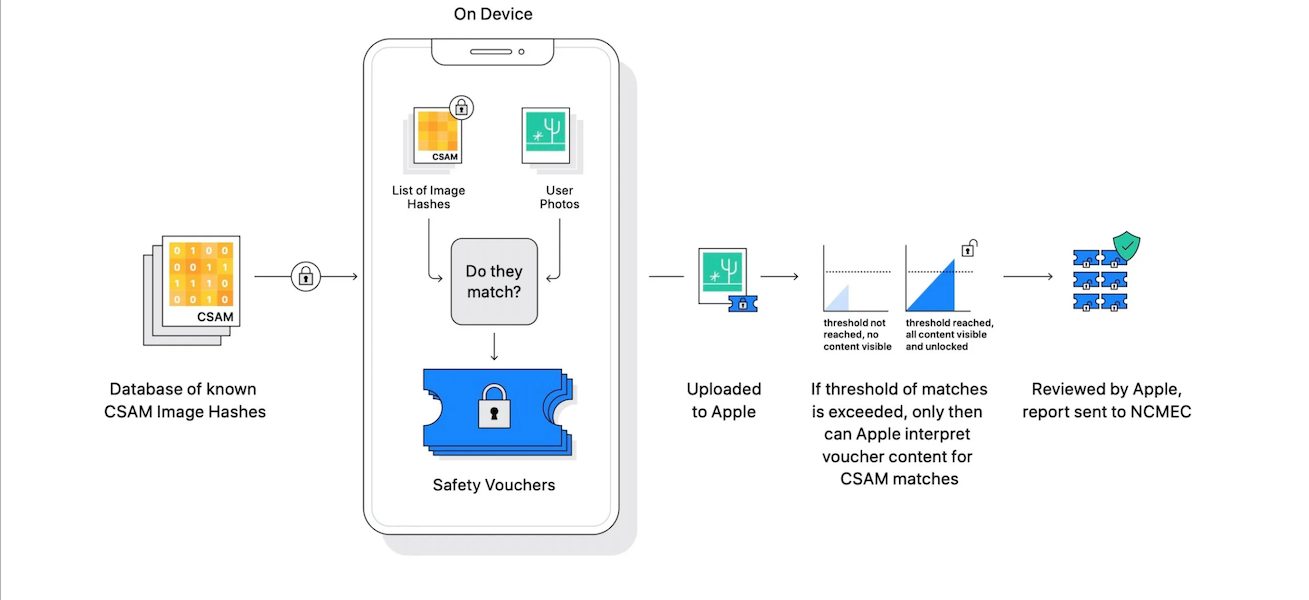

Apple recently unveiled new Child Safety features that allow the company to analyze iMessages for sexually explicit images, scan iCloud Photos to detect child sexual abuse material (CSAM), and update Siri and Search to warn, intervene, and guide children when they search for CSAM-related topics.

Since the tech giant announced its new Child Safety measures, many companies, technologists, academics, and policy advocates have come out against the plan. In an open letter, which has garnered more than 4,000 signatures, the tech giant has been asked to “reconsider its technology rollout.”

WhatsApp says it will not adopt Apple’s Child Safety plan

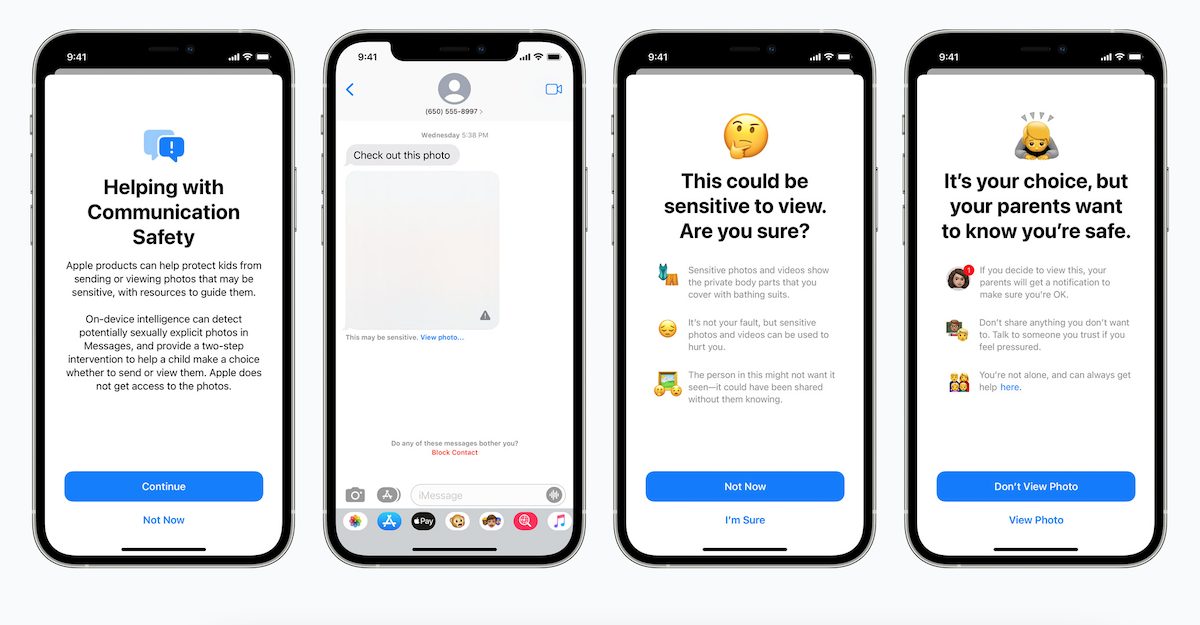

The upcoming Communication Safety in Messages will introduce new protection tools to warn children when receiving and sending sexually explicit photos. The feature will also notify parents when a child views or sends such material. An on-device machine learning system will scan shared media in messages to detect and blur sexually explicit photos. In addition, the child will be “warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo.”

In a Twitter thread, WhatsApp’s head Will Cathcart said that his company will not be adopting the safety measures, calling Apple’s approach “very concerning.” Cathcart said WhatsApp’s defense against child exploitation, which relies on user reports, led the company to report over 400,000 cases to the National Center for Missing and Exploited Children in 2020.

Cathcart says Apple’s plan is “the wrong approach and a setback for people’s privacy all over the world.” He acknowledged that the Cupertino tech giant “has long needed to do more to fight CSAM,” but he still criticized Apple’s approach:

Instead of focusing on making it easy for people to report content that’s shared with them, Apple has built software that can scan all the private photos on your phone — even photos you haven’t shared with anyone. That’s not privacy.

Cathcart describes the plan as “an Apple-built and operated surveillance system that could very easily be used to scan private content for anything they or a government decides it wants to control.” He also claims that “countries, where iPhones are sold, will have different definitions on what is acceptable.”

